Notes

- Permalink

Proxmox VE 9.1 has been released. The most important part of this release is definitely the support for OCI images, which moves Proxmox closer to Docker-style workloads. But what does this actually mean?

Previously, to run containers in Proxmox you had to create an LXC container, install Docker inside it, and only then run your container. With this release, Proxmox lets you query a registry, pull the image you need, unpack it, and convert it into a disk image that LXC can use. In effect, OCI images serve as templates for LXC containers. So it isn’t native support in the strict sense: Proxmox continues to use LXC, and this is simply a convenience feature for generating an LXC container from an OCI image.

What I’m wondering is how updates to these images are handled. I’ve read some posts and watched a few videos, but it seems Proxmox hasn’t gone that far yet.

- Permalink

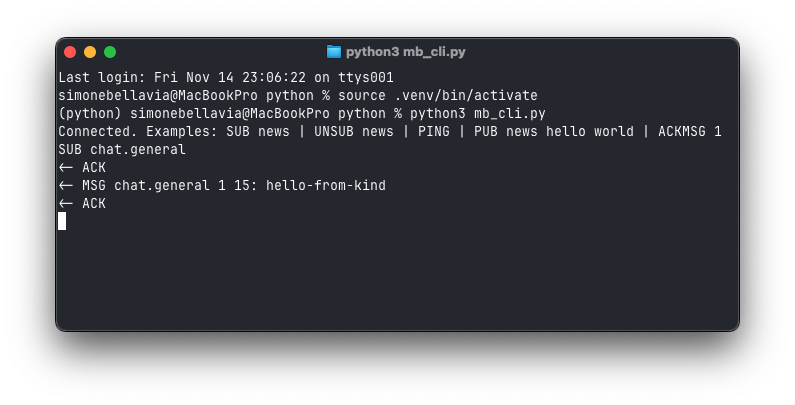

Just playing with my new side project.

- Permalink

GLM 4.6. It’s Z.ai’s new flagship model. It is an update to GLM-4.5 and reaches near parity with Claude Sonnet 4.

The major improvement is the longer context window, expanded from 128K to 200K tokens. It also shows significant improvements in coding. Z.ai aims to make it the best agentic coding model.

It is available for Claude Code, Opencode, Cline, and many others. Their Lite Plan, costing $3/month, provides up to 120 prompts every 5 hours, which should be about 3x the usage quota of the Claude Pro plan. I’m gonna try it today.

As I did for previous LLM releases on this blog, I won’t report the benchmarks but you can consult them on their official blog announcement.

- Permalink

Lots of interesting stuff, I don’t know where to start!

I’ll start with DeepSeek, since I am a stan.

DeepSeek-V3.2-Exp. It’s a new model built on V3.1-Terminus, and it introduces DeepSeek Sparse Attention, a new architecture module that enables fine-grained sparse attention by selecting the top-k key-value entries for each query using efficient FP8 operations and a lightning indexer. It was built by training five RL-specialized models (math, competitive programming, agentic coding, logical reasoning, and agentic search) using GRPO, then distilling them into the final 685B-parameter model. It comes with a 6-page paper that is not very specific, but it really seems that they are silently cooking and have figured something out. Anyway: 10x cheaper inference at 128k tokens, with API prices cut by 50%+. They insist on it being experimental. It matches V3.1-Terminus on most benchmarks, but shows slight degradation on reasoning-heavy tasks like GPQA because it generates fewer reasoning tokens. It seems they cracked cheap, long context for LLMs. I’ll try to write more on the paper when I have time.
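To make the "top-k key-value entries for each query" idea concrete, here is a toy sketch in Python. It is not DeepSeek's implementation: the indexer is a plain dot product standing in for the lightning indexer, nothing runs in FP8, and the names `sparse_attention`, `index_scores` and `top_k` are my own, chosen for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_attention(query, keys, values, top_k):
    """Attend only to the top_k keys with the highest index score."""
    # Hypothetical indexer: a cheap relevance score per key (here a plain dot product).
    index_scores = [dot(query, k) for k in keys]
    # Keep the indices of the top_k highest-scoring keys.
    selected = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)[:top_k]
    # Standard scaled dot-product attention, restricted to the selected entries.
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) * scale for i in selected])
    dim = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, selected)) for d in range(dim)]
    return output, sorted(selected)

query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 0.0]]
output, selected = sparse_attention(query, keys, values, top_k=2)
print(selected)  # indices of the two keys that were actually attended to
```

In this toy version the indexer still scores every key, so the saving is only in the softmax and the weighted sum; the interesting part of the real design is presumably making that scoring step very cheap.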

Claude Sonnet 4.5: boring stuff? Anthropic made a bold statement about their latest model: the world’s best coding model. I’m not a fan of benchmarks, but it seems to outperform Opus 4.1, Sonnet 4, GPT-5-Codex, GPT-5 and Gemini 2.5 Pro on a lot of them. My personal highlights: an extended thinking mode, which allows sustained focus on multi-step tasks for over 30 hours, and training on a proprietary dataset mix that includes public internet data (up to July 2025). Post-training uses RL from human and AI feedback.

Bonus: LoRA without regret. New blog post from Thinking Machines that experimentally shows that LoRA fine-tuning matches full fine-tuning’s sample and compute efficiency for post-training on smaller datasets when using high ranks and applying it to all layers (especially MLPs). Worth reading.

- Permalink

Qwen3-Max has been released by the Qwen team. It’s their largest and most advanced large language model to date. It competes against GPT-5 and Grok 4.

The base model has over 1 trillion parameters and was pretrained on 36 trillion tokens. Its architecture seems to follow that of other models in the Qwen3 series: a highly optimized MoE design, which activates only a subset of parameters per inference. This is something we’ve already seen with the Qwen3-Next models, from which I think it also inherits the context window.

The thinking variant, Qwen3-Max-Thinking, is equipped with tool use, and they say it’s deployed in heavy mode. It’s unclear to me what they mean by that: perhaps they give it far more computational resources than the non-thinking variant.

They are taking the core architecture and maxxioptimizing it to reduce costs and improve efficiency. It’s impressive to me.

In the last 12 hours, Qwen has released:

- Qwen3-Max

- Qwen3-VL-235B-A22B: most powerful vision-language model in the series

- Upgrade to Qwen3-Coder: improved terminal tasks, safer code gen

- Qwen3Guard: safety moderation series for real-time AI content filtering

- Personal AI Travel Designer: new feature in Qwen Chat for personalized trip planning

- Qwen3-LiveTranslate-Flash: low-latency live translation model for real-time audio/text

While Qwen is continuing to optimize and release new models, I’ll wait for DeepSeek. I’m convinced they are cooking.

- Permalink

Go has added Valgrind support. While reading the commit, I saw this:

Instead of adding the Valgrind headers to the tree, and using cgo to call the various Valgrind client request macros, we just add an assembly function which emits the necessary instructions to trigger client requests.

This is super interesting. Let’s have a quick look at the code:

```
// Copyright 2025 The Go Authors. All rights reserved.
// Use of this source code is governed by a BSD-style
// license that can be found in the LICENSE file.

//go:build valgrind && linux

#include "textflag.h"

// Instead of using cgo and using the Valgrind macros, we just emit the special client request
// assembly ourselves. The client request mechanism is basically the same across all architectures,
// with the notable difference being the special preamble that lets Valgrind know we want to do
// a client request.
//
// The form of the VALGRIND_DO_CLIENT_REQUEST macro assembly can be found in the valgrind/valgrind.h
// header file [0].
//
// [0] https://sourceware.org/git/?p=valgrind.git;a=blob;f=include/valgrind.h.in;h=f1710924aa7372e7b7e2abfbf7366a2286e33d2d;hb=HEAD

// func valgrindClientRequest(uintptr, uintptr, uintptr, uintptr, uintptr, uintptr) (ret uintptr)
TEXT runtime·valgrindClientRequest(SB), NOSPLIT, $0-56
	// Load the address of the first of the (contiguous) arguments into AX.
	LEAQ args+0(FP), AX

	// Zero DX, since some requests may not populate it.
	XORL DX, DX

	// Emit the special preabmle.
	ROLQ $3, DI; ROLQ $13, DI
	ROLQ $61, DI; ROLQ $51, DI

	// "Execute" the client request.
	XCHGQ BX, BX

	// Copy the result out of DX.
	MOVQ DX, ret+48(FP)

	RET
```

This is the amd64 assembly for the Valgrind client request. This asm emits the exact instruction sequence that Valgrind’s macro `VALGRIND_DO_CLIENT_REQUEST` would have produced in C, just without cgo.

On arm64, the same idea is implemented with different registers and the AArch64 “marker” Valgrind looks for.

It’s nice because they do everything in the language itself, even when relying on assembly. Some reasons I could imagine for doing it this way: avoiding cgo and keeping the runtime pure Go, but most importantly, control.

It’s really interesting to me that the Go team decided to follow this route. Also, I’m not a fan of cgo.

- Permalink

A lot of activity from the Qwen team, and DeepSeek is starting to come out of its temporary stealth mode. Here is a summary of the updates I found most interesting.

Qwen models

- Qwen3-Omni, a 30B multimodal model that supports text, audio, images and video. MoE-based Thinker–Talker design with AuT pretraining for strong general representations, plus a multi-codebook design that drives latency to a minimum. It seems to think a lot! BF16 Instruct model is 78.85 GB. This model replaces the previous Qwen2.5-Omni.

- Qwen3-Next-80B-A3B-Instruct-FP8 and Qwen3-Next-80B-A3B-Thinking-FP8: the official FP8-quantized versions.

DeepSeek updates to V3.1

DeepSeek released DeepSeek-V3.1-Terminus, an updated version of their V3.1 model. What’s improved? Language consistency: fewer CN/EN mix-ups and no more random characters. Agentic tool use also seems to have improved.

Exciting times.

- Permalink

xAI releases Grok 4 Fast. It uses the same architecture as Grok 4 but incorporates efficiency improvements from training data and reinforcement learning. It supports a 2 million token context window, and unified weights handle both chain-of-thought reasoning and direct responses, controlled by prompts.

Also, it’s cheap! 47x cheaper than Grok 4. I think it’s also cheaper than average, at $0.20 per million input tokens and $0.50 per million output tokens. These prices refer to requests under 128k tokens.
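To get a feel for what those prices mean per request, here is a small back-of-the-envelope calculation in Python. The two rates are the ones quoted above; the function name `request_cost_usd` and the example token counts are made up for illustration.

```python
# Prices quoted above, valid for requests under 128k tokens.
INPUT_USD_PER_MTOK = 0.20
OUTPUT_USD_PER_MTOK = 0.50

def request_cost_usd(input_tokens, output_tokens):
    """Cost of a single request in USD at the per-million-token rates above."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000

# Hypothetical request: a 100k-token prompt with a 2k-token answer.
cost = request_cost_usd(input_tokens=100_000, output_tokens=2_000)
print(f"${cost:.4f}")  # prints "$0.0210"
```

So even a prompt that nearly fills the 128k tier costs about two cents.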

- Permalink

New model in town! GPT-5-Codex is a version of GPT-5 built specifically for agentic coding in Codex. Here’s what you need to know:

- It dynamically adapts its thinking based on the complexity of the task.

- It adheres to AGENTS.md instructions.

- It has been trained specifically for conducting code reviews and finding critical flaws.

- GPT-5 and GPT-5-Codex achieve comparable accuracy on SWE-bench Verified (72.8% vs. 74.5%), but GPT-5-Codex shows a clear advantage in code refactoring tasks (51.3% vs. 33.9%).

- OpenAI found that comments by GPT‑5-Codex are less likely to be incorrect or unimportant: GPT-5-Codex produces fewer incorrect comments (4.4% vs. 13.7%) and more high-impact comments (52.4% vs. 39.4%) than GPT-5. Interestingly, GPT-5 makes more comments per pull request on average (1.32 vs. 0.93), but with lower precision and impact.

Many are complaining about the naming and the “Codex everywhere”. Honestly, I don’t care so much about the poor naming scheme as long as models and tools are good.

GPT-5-Codex is not available in the API yet, but it will be soon. To use it, you will need Codex CLI, so make sure to install it: `npm i -g @openai/codex`. @sama claims that GPT-5-Codex already represents ~40% of traffic for Codex.

I installed and tried it (yes, this is my first time using Codex). You can choose the model’s reasoning effort: when you type `/model`, Codex lets you choose between `gpt-5-codex low`, `gpt-5-codex medium` and `gpt-5-codex high`. OpenAI, however, recommends leaving `model_reasoning_effort` at its default (medium) to take full advantage of the more dynamic reasoning effort.

Along with the model, they also provided more updates:

- Codex runs in a sandboxed environment with network access disabled, whether locally or in the cloud.

- In Codex CLI, you can now resume a previous interactive session.

- Once turned on for a GitHub repo, Codex automatically reviews PRs.

- It is possible to asynchronously delegate tasks to Codex Cloud.

And more.

I think they’re heading in the right direction, actually. They’re focusing their efforts on the tools, which is good. What’s more, I have to say that I’ve reevaluated GPT-5 and am now using it daily instead of Claude. That’s why I appreciate and welcome these new releases.

Last but not least, Codex is open-source!

- Permalink

The Qwen team released two new models: Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking. Both are already available on Hugging Face. Qwen also published a post on their blog.

Compared to the MoE structure of Qwen3, Qwen3-Next introduces several key improvements: a hybrid attention mechanism, a highly sparse Mixture-of-Experts (MoE) structure, training-stability-friendly optimizations, and a multi-token prediction mechanism for faster inference.

Both models are based on the Qwen3-Next-80B-A3B-Base model, which activates only 3 billion parameters per token. Qwen3-Next is an ultra-sparse MoE with 512 experts, combining 10 routed experts and 1 shared expert. It’s also based on a hybrid architecture, composed of Gated DeltaNet + Gated Attention.
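The routing idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not Qwen's code: I read the numbers above as 10 routed experts picked per token out of 512, plus 1 shared expert that always runs, and I assume a softmax over the selected router logits. The names `route`, `moe_layer` and the scalar "experts" are hypothetical.

```python
import math
import random

NUM_EXPERTS = 512  # routed experts to choose from (figure from the note above)
TOP_K = 10         # routed experts activated per token
# One shared expert is always applied in addition to the routed ones.

def route(router_logits, top_k=TOP_K):
    """Return (expert_index, weight) pairs for the top_k experts of one token."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Assumption: softmax over the selected logits only, so routed weights sum to 1.
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(x, router_logits, experts, shared_expert):
    """Shared expert output plus a weighted sum of the selected routed experts."""
    out = shared_expert(x)
    for idx, weight in route(router_logits):
        out += weight * experts[idx](x)
    return out

random.seed(0)
# Toy scalar "experts": expert i multiplies its input by i / NUM_EXPERTS.
experts = [lambda x, s=i / NUM_EXPERTS: s * x for i in range(NUM_EXPERTS)]
shared_expert = lambda x: x
router_logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

routed = route(router_logits)
y = moe_layer(2.0, router_logits, experts, shared_expert)
print(len(routed), "routed experts used out of", NUM_EXPERTS)
```

Only the selected experts are ever called, which is where the "80B total, 3B active" gap comes from: most of the parameters sit in experts that a given token never touches.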

They say Qwen3-Next-80B-A3B-Instruct approaches their 235B flagship, and Qwen3-Next-80B-A3B-Thinking seems to outperform Gemini-2.5-Flash-Thinking.

Qwen3-Next natively supports context lengths of up to 262,144 tokens, but they even validated it on context lengths of up to 1 million tokens using the YaRN method. YaRN is supported by `transformers`, `vllm` and `sglang`.

- Permalink

Apple presented the iPhone Air, the thinnest iPhone ever. This is the only new release from Apple that caught my interest during their presentation event.

Its design is interesting: the entire logic board and A19 Pro chip are compacted into the camera bump (which includes both front and rear cameras). This iPhone is all battery and screen. IMHO, it seems like a strategic move for the coming years, for which this iPhone Air will serve as an experiment or a launchpad for ultra-thin devices, or simply as a research and development testbed for similar designs that enable powerful yet ultra-compact technologies.

Notably, the iPhone Air has the A19 Pro, Apple’s latest SoC. In more detail: it is built on TSMC’s N3P process node, and benefits from a 20% increase in transistor density compared to its predecessor, the N3E node, according to a 2023 IEEE study on semiconductor scaling. The A19 Pro features a six-core CPU with two high-performance cores and four efficiency cores, and a 5-core GPU. Each GPU core has its own Neural Accelerators, which Apple claims allow for MacBook Pro-level performance in an iPhone. On the new iPhone Pro, they are even more powerful. If the M5 chip gets this GPU upgrade… well, NVIDIA should start to feel some pressure.

To summarize: local AI to the Max. Next year, I want local LLMs on my phone.

- Permalink

Yesterday, a lot of npm packages were compromised with malicious code. Here is a list of affected packages:

- ansi-styles@6.2.2

- debug@4.4.2 (appears to have been yanked as of 8 Sep 18:09 CEST)

- chalk@5.6.1

- supports-color@10.2.1

- strip-ansi@7.1.1

- ansi-regex@6.2.1

- wrap-ansi@9.0.1

- color-convert@3.1.1

- color-name@2.0.1

- is-arrayish@0.3.3

- slice-ansi@7.1.1

- color@5.0.1

- color-string@2.1.1

- simple-swizzle@0.2.3

- supports-hyperlinks@4.1.1

- has-ansi@6.0.1

- chalk-template@1.1.1

- backslash@0.2.1

and more, I think. I suggest reading the original post published on aikido.dev[1] and the related HN discussion[2]; both links are reported below.

All packages appear to contain a piece of code that would be executed on the client of a website, which silently intercepts crypto and web3 activity in the browser, manipulates wallet interactions, and rewrites payment destinations so that funds and approvals are redirected to attacker-controlled accounts without any obvious signs to the user (as shared from Aikido).

You can run grep or rg to check if your codebase has been impacted – thanks to sindresorhus for this suggestion:

```
rg -u --max-columns=80 _0x112fa8
```

This one requires ripgrep, but you can do the same with `grep` (ripgrep is its Rust equivalent redesign).

My thoughts about this: dependency hell is real and these are the results. I agree with Mitchell Hashimoto when he says that npm should adopt some strategies to mitigate these risks, such as rejecting all dependencies that have fewer than 1k LoC. I mean, let’s just avoid using external packages to determine if an object can act like an array.

Also, I would like to share one insight reported by DDerTyp on HN:

One of the most insidious parts of this malware’s payload, which isn’t getting enough attention, is how it chooses the replacement wallet address. It doesn’t just pick one at random from its list. It actually calculates the Levenshtein distance between the legitimate address and every address in its own list. It then selects the attacker’s address that is visually most similar to the original one. This is a brilliant piece of social engineering baked right into the code. It’s designed to specifically defeat the common security habit of only checking the first and last few characters of an address before confirming a transaction.

It needs a bit more investigation, for which I don’t have enough time, but it looks interesting.
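To see why that trick works against the "check the first and last few characters" habit, here is a small Python sketch of nearest-string selection by Levenshtein distance. It is my own illustration of the idea described in the comment, not code taken from the payload; `levenshtein` and `closest` are names I picked, and the strings are made-up placeholders, not real wallet addresses.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        prev = curr
    return prev[-1]

def closest(target, candidates):
    """Pick the candidate with the smallest edit distance to target."""
    return min(candidates, key=lambda c: levenshtein(target, c))

# Made-up placeholder strings, not real addresses.
legit = "0xAB12cd34EF56"
pool = ["0x9f00aa11bb22", "0xAB12cd99EF56", "0x000000000000"]
print(closest(legit, pool))
```

The winner here differs from the original only in two characters in the middle, so a glance at the start and the end would not catch it. Comparing the full string is the only check that holds up.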

[1] Original post

- Permalink

I decided to change the template and layout of this site. I often find myself writing short notes very quickly during the day, which don’t always fit the traditional blog post format, since they are very brief. Also, I don’t always have the time to write long posts. For this reason, I modified the template to allow me to publish and share short Notes (like the one you are reading right now). Of course, I will continue to write and publish blog Posts when I have time.

On top of that, kinoroll.com no longer exists: the domain expired and I don’t intend to renew it. I preferred to move it here, onto my personal site! This way I can centralize everything and not have to manage different sites and domains. So the Kinoroll section will show the old kinoroll.com, but from now on I’ll share the links I like, along with some thoughts when possible, in the Link section, creating my own personal blogroll.

I hope my blog is easier to navigate now. I was strongly inspired by Simon Willison.

- Permalink

Yesterday, Fil-C popped up to the top of Hacker News. This time the submission got a fair amount of traction, sparking a lot of interest in the community, including a comment from Andrew Kelley. In fact, I’ve been interested in Fil-C for about a year already: my first submission on Hacker News was eight months ago. So I can say I’ve been actively following the project’s progress, also thanks to the activity of its creator, @filpizlo, on Twitter.

Fil-C is a compiler that implements the C and C++ languages with a memory-safe approach. Recently, Filip has published more documentation about the Garbage Collector and about the capabilities he calls “InvisiCaps”, which are more related to pointer safety.

Well, for me this is kind of a dream. I love the C language, it’s my favorite, but I admit I have some skill issues when it comes to memory management, though not because of the language itself, but rather due to my own code-writing proficiency, which could definitely be better. Recently, I’ve been exploring Rust and Zig precisely for this reason, and I’ve found myself appreciating Zig more than Rust because of its minimalism. Having a memory-safe implementation of C would therefore resolve a lot of the headaches caused by memory management.

Fil-C seems like the sweet spot between academic research and pragmatic work. Beyond the documentation, there’s also a list of programs already ported to Fil-C, showing that sometimes no code changes are required, and when they are, the effort is moderate.

So, the next step for me is to dig deeper into the topic and try it out myself! In the meantime, I thought it would be fair to personally share what Filip is doing, because the project deserves much more attention than it’s currently getting, imo.

- Permalink

Nothing fancy. I just dumped the Divina Commedia into a contiguous `u16` slice.

```
const path = "commedia.txt";
const buf = try tok.tokenizeFile(allocator, path);
defer allocator.free(buf.data);
```

Running it:

```
$ zig run src/main.zig -- commedia.txt
tokens: 300682 (expected 300682)
head: { 10, 32, 32, 78, 101, 108, 32, 109, 101, 122 }
```

Just 300682 u16s waiting for an embedding matrix :)
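For anyone who does not read Zig, the same idea can be sketched in Python. This rests on an assumption about what the tokenizer does: the `head` values look like plain character codes (10, 32, 32, 78, 101, 108 is a newline, two spaces, then `Nel`), so the sketch maps each character to its code point and stores the result in a contiguous array of unsigned 16-bit integers. `tokenize_text` is a hypothetical name, not the `tok.tokenizeFile` from the snippet.

```python
from array import array

def tokenize_text(text):
    """Map each character to its code point, stored as contiguous unsigned shorts."""
    # Typecode "H" is an unsigned short (at least 16 bits).
    # A code point that does not fit raises OverflowError.
    return array("H", (ord(ch) for ch in text))

# First line of the poem, with the leading newline and indentation assumed from `head`.
sample = "\n  Nel mezzo del cammin di nostra vita"
tokens = tokenize_text(sample)
print(len(tokens), list(tokens[:10]))
```

If the Zig version works on bytes rather than code points, the two would diverge on accented characters, so treat this as an approximation of the note and not a port.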

- Permalink

There is a very interesting law that I think is worth sharing:

I will apply it more often.